Couchbase Exporter Setup (Prometheus)

- This tutorial walks you through installation and configuration of the Couchbase Exporter

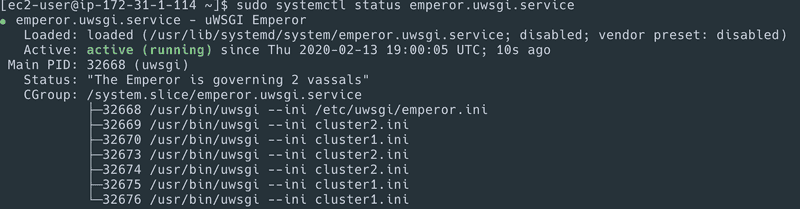

- Learn about setting up uWSGI with Emperor to execute multiple Cluster Monitoring Instances

The following will walk you through how to install and configure the Couchbase Exporter and its dependencies. Depending on which mode you want to run the Couchbase Exporter in, there will either be a single instance for each cluster, or an instance for each node in the cluster.

Download Couchbase Exporter

Download the Couchbase Exporter Python code.

curl -L \
  https://github.com/couchbaselabs/cbprometheus_python/tarball/master > \
  couchbase_exporter.tar.gz

Create User

Create a Couchbase Exporter user and the required directories, and make the couchbase_exporter user the owner of those directories.

sudo groupadd -f couchbase_exporter

sudo useradd -g couchbase_exporter --no-create-home --shell /bin/false couchbase_exporter

sudo mkdir /etc/couchbase_exporter

sudo chown couchbase_exporter:couchbase_exporter /etc/couchbase_exporter

Unpack Couchbase Exporter Binary

Untar the downloaded Couchbase Exporter code into a couchbase_exporter directory.

mkdir -p couchbase_exporter

tar -xzf couchbase_exporter.tar.gz \
  -C couchbase_exporter --strip-components=1

Install Python Dependencies

Python is required for the exporter to run, along with the uwsgi package.

pip is not packaged in the official CentOS/RHEL software repositories, so the EPEL repository needs to be enabled first.

CentOS

sudo yum install epel-release

If you're running in AWS you'll need to run:

sudo amazon-linux-extras install epel

Install pip

CentOS

sudo yum install python-pip python-devel gcc -y

Install uwsgi and flask using pip

sudo pip install -r ./couchbase_exporter/requirements

Install Couchbase Exporter

Move the couchbase_exporter directory to /opt/couchbase_exporter and change its ownership to the couchbase_exporter user.

sudo mv couchbase_exporter /opt

sudo chown -R couchbase_exporter:couchbase_exporter /opt/couchbase_exporter

Setup ssh keys for cbstats

This step only needs to be performed if you are running the exporter in a cluster/standalone mode and wish to retrieve cbstats metrics. If you are running the exporter in local mode, this step is not required as the local version of cbstats is used.

This can be done in a few ways. In this example we will create a user for the exporter to use on the Couchbase nodes. You will need SSH and sudo access to complete this step.

From the exporter:

ssh-keygen -t rsa -b 4096 -C "enter.user@domain.com"

Enter file in which to save the key (/home/vagrant/.ssh/id_rsa): exporter

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in exporter.

Your public key has been saved in exporter.pub.

mv exporter* ~/.ssh

cat ~/.ssh/exporter.pub

Copy the key to your clipboard

You can set up keys on each of the individual Couchbase nodes, and the exporter will connect to each node and run cbstats against that node. Alternatively, you can set up the key on a single host in the cluster and use that node to access the other nodes in the cluster. If you do the latter, you have to set the CB_SSH_HOST environment variable.
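To make the two layouts concrete, the following Python sketch shows which host an SSH session would be opened on for each node, and therefore which hosts need the public key installed. It is illustrative only: `ssh_targets` and `hosts_needing_key` are hypothetical helper names rather than part of the exporter, and the exporter's own handling of CB_SSH_HOST may differ.

```python
from typing import Dict, List, Optional


def ssh_targets(nodes: List[str], ssh_host: Optional[str] = None) -> Dict[str, str]:
    """Map each Couchbase node to the host an SSH session would be opened on.

    With no ssh_host (CB_SSH_HOST unset) every node is contacted directly.
    With ssh_host set, that single host is used to reach every node.
    """
    return {node: (ssh_host or node) for node in nodes}


def hosts_needing_key(nodes: List[str], ssh_host: Optional[str] = None) -> List[str]:
    """Return the distinct hosts that need the exporter's public key."""
    return sorted(set(ssh_targets(nodes, ssh_host).values()))
```

For example, `hosts_needing_key(["10.1.2.100", "10.1.2.101"])` lists both nodes, while passing `ssh_host="10.1.2.100"` reduces the list to that one host.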

On each host the exporter will need to connect to:

sudo useradd -m -d /home/exporter -s /bin/bash -G couchbase exporter

sudo su

mkdir /home/exporter/.ssh

chown exporter:exporter /home/exporter/.ssh/

vi /home/exporter/.ssh/authorized_keys

Paste the copied key into the authorized keys file

chmod 600 /home/exporter/.ssh/authorized_keys

chown exporter:exporter /home/exporter/.ssh/authorized_keys

exit

uwsgi Emperor

Emperor will maintain and execute multiple instances of uwsgi.

Create a directory for the uwsgi configuration files.

sudo mkdir -p /etc/uwsgi/vassals

Create a new file for the emperor.ini

sudo vi /etc/uwsgi/emperor.ini

Add the following contents to the emperor.ini file

[uwsgi]

emperor = /etc/uwsgi/vassals

Set the appropriate permissions

sudo chown -R couchbase_exporter:couchbase_exporter /etc/uwsgi

Vassals (Cluster Monitoring Instances)

Create an ini file for each cluster that you wish to monitor.

sudo vi /etc/uwsgi/vassals/{{CLUSTER}}.ini

Replace {{CLUSTER}} with a friendly name that contains no spaces (e.g. cluster1.ini).

Add the following contents to the file. Replace {{CLUSTER_HOSTNAME}} with the hostname of one of the Couchbase nodes in the cluster that you wish to monitor. Each exporter needs to run on a different port; it is recommended that you start with 5000 for {{PORT}} and increment by 1 (e.g. 5000, 5001, 5002).

- CB_EXPORTER_MODE - This can be "standalone" or "local".
- CLUSTER - Friendly cluster name (no spaces). If CB_EXPORTER_MODE is set to local, this value is changed to "localhost".
- CLUSTER_HOSTNAME - A comma-delimited list of one or more nodes (from the same cluster).
- CLUSTER_USERNAME - An RBAC user with Read-Only Admin as well as System Catalog Query permissions.
- CLUSTER_PASSWORD - The password for the RBAC user.
- PORT - The port for the exporter to listen on.
- CB_RESULTSET - Optional, used to limit the result size. Default is 60. For larger clusters or clusters with a high number of buckets/indexes, consider lowering this value.
- CB_CBSTAT_PATH - Optional, the path to cbstats for non-default installations of Couchbase.
- CB_KEY - Required if you intend to use cbstats in standalone mode from the exporter; the path to the private key.
- CB_SSH_USER - Required if you intend to use cbstats from the exporter in standalone mode; the username for the private key.
- CB_SSH_HOST - Required if using cbstats in standalone mode and only connecting to a single host; the IP address of that host.
- CB_NODE_EXPORTER_PORT - Optional, the port that Node Exporter is running on. The Couchbase Exporter can act as a proxy to Node Exporter, retrieving the Node Exporter metrics and adding labels with Couchbase Server information to them. Defaults to 9200.
- CB_PROCESS_EXPORTER_PORT - Optional, the port that Process Exporter is running on. The Couchbase Exporter can act as a proxy to Process Exporter, retrieving the Process Exporter metrics and adding labels with Couchbase Server information to them. Defaults to 9256.
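The naming and port guidance above can be checked before any vassal files are written. The following Python sketch is one possible way to do that, not something the exporter provides: `assign_ports` is a hypothetical helper that rejects cluster names containing whitespace and hands out consecutive ports starting at 5000.

```python
import re
from typing import Dict, List


def assign_ports(clusters: List[str], first_port: int = 5000) -> Dict[str, int]:
    """Assign one port per cluster, starting at first_port and incrementing by 1.

    Raises ValueError for empty names, names containing whitespace,
    or duplicate names, since each cluster gets its own vassal file.
    """
    ports: Dict[str, int] = {}
    for offset, name in enumerate(clusters):
        if not name or re.search(r"\s", name):
            raise ValueError(f"cluster name must be non-empty with no spaces: {name!r}")
        if name in ports:
            raise ValueError(f"duplicate cluster name: {name!r}")
        ports[name] = first_port + offset
    return ports
```

Each resulting pair supplies the {{CLUSTER}} and {{PORT}} values for one vassal file.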

[uwsgi]

http = :{{PORT}}

pidfile = /tmp/{{CLUSTER}}.pid

env = CB_DATABASE={{CLUSTER_HOSTNAME}}

env = CB_USERNAME={{CLUSTER_USERNAME}}

env = CB_PASSWORD={{CLUSTER_PASSWORD}}

env = CB_RESULTSET={{CB_RESULTSET}}

env = CB_CBSTAT_PATH={{CB_CBSTAT_PATH}}

env = CB_KEY={{CB_KEY}}

env = CB_SSH_USER={{CB_SSH_USER}}

env = CB_SSH_HOST={{CB_SSH_HOST}}

processes = 1

master =

chdir = /opt/couchbase_exporter/src

wsgi-file = /opt/couchbase_exporter/src/wsgi.py

enable-threads =

Set the appropriate permissions on the file

sudo chown couchbase_exporter:couchbase_exporter /etc/uwsgi/vassals/{{CLUSTER}}.ini

Setup Emperor Service

Configure emperor to run as a service by creating the following file:

sudo vi /usr/lib/systemd/system/emperor.uwsgi.service

Add the following configuration

[Unit]

Description=uWSGI Emperor

After=syslog.target

[Service]

User=couchbase_exporter

Group=couchbase_exporter

ExecStart=/usr/bin/uwsgi --ini /etc/uwsgi/emperor.ini

RuntimeDirectory=/opt/couchbase_exporter

Restart=always

KillSignal=SIGQUIT

Type=notify

StandardError=syslog

NotifyAccess=all

[Install]

WantedBy=multi-user.target

Set the appropriate permissions

sudo chmod 664 /usr/lib/systemd/system/emperor.uwsgi.service

Reload systemd and Start Emperor

Reload systemd to register the Emperor service, then start the Emperor service.

sudo systemctl daemon-reload

sudo systemctl start emperor.uwsgi.service

Check the Emperor service status using the following command.

sudo systemctl status emperor.uwsgi.service

Configure Emperor to start at boot

sudo systemctl enable emperor.uwsgi.service

If firewalld is enabled and running, add a rule for the port of each configured exporter (e.g. 5000, 5001, etc.).

sudo firewall-cmd --permanent --zone=public --add-port=5000/tcp

sudo firewall-cmd --reload

Verify the Exporter is Running

Verify the exporter is running by visiting a metrics endpoint, such as /metrics/buckets, on port 5000 of the exporter host:

http://<couchbase_exporter/emperor-ip>:5000/metrics/buckets

You should be able to see something similar to the following:

...

ep_dcp_other_producer_count {cluster="Demo-6.0.3", bucket="demo", node="10.1.2.100", type="bucket"} 0 1581621651527

ep_dcp_other_producer_count {cluster="Demo-6.0.3", bucket="demo", node="10.1.2.100", type="bucket"} 0 1581621652528

ep_dcp_other_producer_count {cluster="Demo-6.0.3", bucket="demo", node="10.1.2.100", type="bucket"} 0 1581621653528

ep_dcp_other_producer_count {cluster="Demo-6.0.3", bucket="demo", node="10.1.2.100", type="bucket"} 0 1581621654527

ep_dcp_other_producer_count {cluster="Demo-6.0.3", bucket="demo", node="10.1.2.100", type="bucket"} 0 1581621655527

ep_dcp_other_producer_count {cluster="Demo-6.0.3", bucket="demo", node="10.1.2.100", type="bucket"} 0 1581621656528

ep_dcp_other_producer_count {cluster="Demo-6.0.3", bucket="demo", node="10.1.2.100", type="bucket"} 0 1581621657528

...

Clean Up

Remove the download and temporary files

rm -rf couchbase_exporter*

If you wish to add another cluster in the future, repeat the Vassals (Cluster Monitoring Instances) step (Step 4.7) and restart the emperor service.
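Since adding a cluster amounts to writing one more vassal file, the file can be generated instead of edited by hand. The Python sketch below is a minimal illustration under stated assumptions: `render_vassal` is a hypothetical helper, the layout copies the vassal ini shown in this tutorial, and optional variables are only written when supplied, which assumes the exporter falls back to its defaults when a variable is unset.

```python
from typing import Dict, Optional


def render_vassal(cluster: str, port: int, hostname: str, username: str,
                  password: str, optional_env: Optional[Dict[str, str]] = None) -> str:
    """Render the contents of /etc/uwsgi/vassals/<cluster>.ini as a string."""
    lines = [
        "[uwsgi]",
        f"http = :{port}",
        f"pidfile = /tmp/{cluster}.pid",
        f"env = CB_DATABASE={hostname}",
        f"env = CB_USERNAME={username}",
        f"env = CB_PASSWORD={password}",
    ]
    # Optional settings such as CB_RESULTSET or CB_KEY (assumption: safe to omit).
    for key, value in (optional_env or {}).items():
        lines.append(f"env = {key}={value}")
    lines += [
        "processes = 1",
        "master =",
        "chdir = /opt/couchbase_exporter/src",
        "wsgi-file = /opt/couchbase_exporter/src/wsgi.py",
        "enable-threads =",
    ]
    return "\n".join(lines) + "\n"
```

The returned text would be written to /etc/uwsgi/vassals/{{CLUSTER}}.ini, after which the ownership change and the emperor service restart apply as described in this tutorial.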